Red Herring Mystery Morph

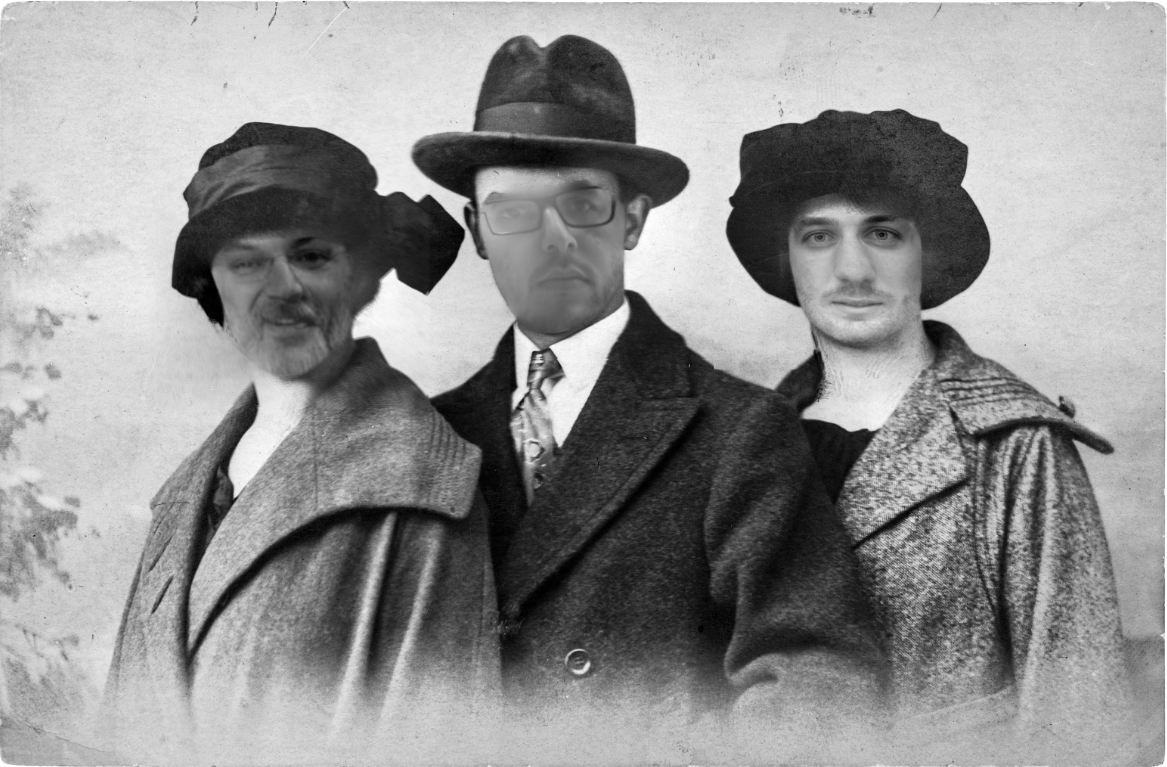

This work is a face-swapping gag for 5Wits adventures. A team of five designed a challenge for 5Wits's new “Mystery Manor” adventure, an escape-room style of live-action entertainment with environments, special effects, and storytelling. The gag uses hidden cameras to take photos of participants, processes the images to isolate a participant's face, swaps that face onto a Victorian subject, and displays the result in an old, crackled frame to complete the mystery experience.

The Mystery Morph user experience aims to shock 5Wits participants with a picture of their own face swapped onto a Victorian subject's body. Without the participants' knowledge, hidden cameras in the manor take photos of them, and the images are processed to isolate each participant's face. Using face-swapping algorithms, a central computer in the Mystery Manor morphs the participant's face onto a Victorian subject and then displays that picture in an old, crackled frame to complete the mystery experience.

This gag can amplify the 5Wits adventure in several ways. One of the main benefits is the gag's modularity: with just a USB webcam and a Raspberry Pi, 5Wits can hide cameras in an array of Mystery Manor objects. Another is of course the experience itself: seeing one's face displayed on another person's body provides the shock and 'wow' factor characteristic of 5Wits. Notably, the face detection and swapping algorithm can also take several faces and paste them onto a photo with several subjects, further amplifying the experience. Finally (and perhaps most importantly), 5Wits can monetize the experience with digital or physical mementos of the face-swap images, which serve as an additional revenue stream and allow participants to re-experience the magic with family and friends whenever they please.

Design

Software Schematic

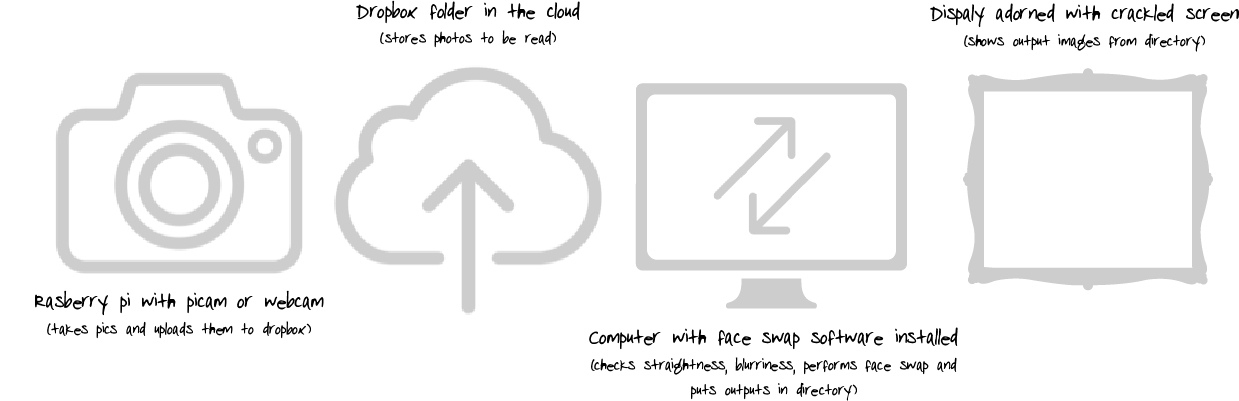

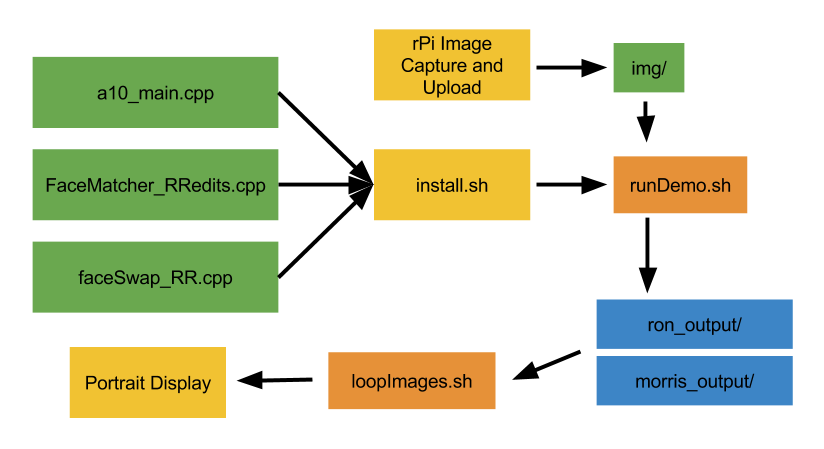

The main demo script is a bash script that runs three different C++ programs. First, it checks the Dropbox folder and obtains the last image submitted by the Raspberry Pi. It checks this image for a face using FaceMatcher. If a face is detected, FaceMatcher creates a .txt file with the locations of the fiducial markers of every face present in the image. If fiducial markers are created, the script then runs FaceSwap, which first checks that the face is not blurry and is looking straight ahead, and then swaps the captured face onto the target Victorian model. Once the face-swapped image is created, the bash script calls FacePaint to algorithmically paint the new image. If all these steps succeed, the bash script replaces the displayed portrait image with the new image. If any step in the workflow fails, the image displayed in the frame does not update.
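The gating in this workflow can be sketched in bash roughly as follows. This is a minimal sketch, not the project's actual script: `detect_cmd`, `swap_cmd`, and `paint_cmd` are hypothetical wrappers standing in for FaceMatcher, FaceSwap, and FacePaint, and their argument order and the temporary file names are assumptions.

```shell
# Sketch only: detect_cmd, swap_cmd and paint_cmd are hypothetical wrappers
# around FaceMatcher, FaceSwap and FacePaint. Their argument order is assumed.

# update_portrait INPUT DISPLAYED
# Overwrites DISPLAYED only if every stage succeeds and leaves a non-empty file.
update_portrait() {
  local input="$1"
  local displayed="$2"
  local landmarks="${input}.txt"
  local workdir
  workdir="$(mktemp -d)" || return 1
  local swapped="${workdir}/swapped.png"
  local painted="${workdir}/painted.png"
  local rc=1

  rm -f "$landmarks"   # a stale landmark file must not count as a detection
  if detect_cmd "$input" "$landmarks" && [ -s "$landmarks" ] &&
     swap_cmd "$input" "$landmarks" "$swapped" && [ -s "$swapped" ] &&
     paint_cmd "$swapped" "$painted" && [ -s "$painted" ]; then
    mv "$painted" "$displayed"   # the only place the shown portrait changes
    rc=0
  fi
  rm -rf "$workdir"
  return "$rc"
}
```

Because the displayed file is only touched in the final `mv`, a failure at any earlier stage leaves the previous portrait on screen.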

The camera module is a Raspberry Pi that runs a bash script on start-up. This script loops an animation in full screen and also controls the pycam to take a picture every second. The Raspberry Pi does not detect any faces itself. As soon as it snaps a picture, any picture, it uses a Dropbox script to push the image to a specific location inside a Dropbox folder. The Raspberry Pi continues to snap pictures and send them one by one for as long as it is on.
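A capture loop of this kind might look like the sketch below. It is illustrative only: `capture_cmd` (write one camera frame to a path) and `upload_cmd` (push one file to the Dropbox folder) are hypothetical placeholders for whatever tools the Pi actually uses, and the `.png` extension and the optional frame limit are assumptions added so the loop can be tried out safely.

```shell
# Sketch only: capture_cmd and upload_cmd are hypothetical placeholders for
# the camera tool and the Dropbox upload script used on the Pi.

# capture_loop OUTDIR [COUNT] [INTERVAL]
# Saves frames as OUTDIR/1.png, OUTDIR/2.png, ... one per INTERVAL seconds.
# With COUNT omitted (or 0) it keeps going until the Pi is switched off.
capture_loop() {
  local outdir="$1"
  local count="${2:-0}"      # 0 means "run forever"
  local interval="${3:-1}"   # seconds between captures
  local n=0
  local file
  mkdir -p "$outdir" || return 1
  while [ "$count" -eq 0 ] || [ "$n" -lt "$count" ]; do
    n=$((n + 1))
    file="${outdir}/${n}.png"
    # No face detection happens here: every frame is captured and pushed.
    if ! { capture_cmd "$file" && upload_cmd "$file"; }; then
      echo "capture ${n} failed" >&2
    fi
    sleep "$interval"
  done
}
```

A failed capture is logged and skipped rather than stopping the loop, so a single bad frame does not take the camera offline.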

Software

In order to demonstrate our gag, it was important to develop a software implementation that effectively showed off the potential of our gag while also being useful in the future without significant modification. A video demo of our implementation is shown above.

In this example, an image of our participant is captured through a two-way mirror. We also added an LCD screen behind the two-way mirror so that the participant can line up a mustache on the reflection of their face. Unfortunately, the display was at a different depth than the reflection, so many people would close one eye to reduce the "double image" effect produced by two-eye stereo parallax. In practice, this method or some other image-capturing technique could be employed to covertly capture an image of the participant.

After the image is captured, it is sent to a display computer where the face swap is calculated. This is done seamlessly using Dropbox: the Raspberry Pi (rPi) saves the current frame to a file every second. The file name is incremented by one for each capture, so subsequent captures do not overwrite old images. The saved-image folder is synced to Dropbox, so images are easily accessible over the internet from any computer using the same Dropbox account. We decided to use Dropbox because it was easy to implement, extremely scalable (many rPis could contribute images at the same time with coded filenames), and a feasible option for a real installation at 5Wits.
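One way to combine incrementing names with per-camera coded filenames is sketched below. The `DEVICE_<n>.png` naming pattern is an assumption made for illustration; the scheme actually used on the rPi is not specified here.

```shell
# Sketch only: the DEVICE_<n>.png naming pattern is an assumption.

# next_capture_path DIR DEVICE
# Prints DIR/DEVICE_<n>.png, where <n> is one more than the highest index
# already present for that device, so earlier captures are never overwritten.
next_capture_path() {
  local dir="$1"
  local device="$2"
  local max=0
  local f
  local n
  for f in "$dir"/"$device"_*.png; do
    [ -e "$f" ] || continue            # the glob matched nothing
    n="${f#"$dir"/"$device"_}"         # drop the directory and device prefix
    n="${n%.png}"                      # drop the extension
    case "$n" in
      ''|0*|*[!0-9]*) continue ;;      # skip non-numeric or zero-padded names
    esac
    if [ "$n" -gt "$max" ]; then
      max="$n"
    fi
  done
  echo "${dir}/${device}_$((max + 1)).png"
}
```

Since each camera only looks at files carrying its own prefix, several rPis can write into the same synced folder without colliding.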

The program flow shown above is a block diagram of how the subcomponents come together to realize our implementation. There are three primary steps needed to achieve the gag.

1. rPi Image Capture: The rPi captures an image every second and saves the result to a folder accessible over Dropbox.

2. Image Composition: For our gag, we used a MacBook Air connected to the network to perform the image processing on images coming in over Dropbox from the rPis.

3. Image Display: The MacBook Air was connected to an external LCD monitor and displayed the final, composited images.

There are three separate binaries written in C++ that are called sequentially using shell (bash) scripts. Each of these three binaries takes an image as input, and applies some additional transformation to realize our effect.

FaceMatcher_RRedits : This program detects a face and determines the pixel location of known facial landmarks, or fiducial points. There are 68 markers in total, such as edges of the cheek and lip shape. The program outputs a text file with the pixel locations of these markers.

FaceSwap_RR : After the face points are found, the faceswap program takes a source image and the new frame as input and merges the faces into a single frame using their corresponding text files (see the previous sections for how this is done). The text file name is assumed to be the same as the image file name with the additional extension ".txt" appended. This program outputs the composited image file to "src/ron_output".

a10_main : Finally, after the face swapping is done, a painting filter is optionally applied. This program takes a file in "src/ron_output" and adds a brush filter, saving the result to "src/morris_output".
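The landmark file convention above (image name plus ".txt", 68 markers per face) lends itself to a quick sanity check between stages. The sketch below assumes one "x y" pair per non-blank line, which is a guess at the file layout rather than the documented format, and the helper names are made up for illustration.

```shell
# Sketch only: assumes one "x y" pair per non-blank line in the landmark
# file. The real layout written by the detector may differ.
POINTS_PER_FACE=68   # fiducial markers per face

# landmark_file IMAGE: the landmark file is the image name plus ".txt".
landmark_file() {
  echo "$1.txt"
}

# count_faces IMAGE
# Prints how many faces the landmark file for IMAGE describes. Fails if the
# file is missing, empty, or not a whole number of 68-point faces.
count_faces() {
  local file
  file="$(landmark_file "$1")"
  [ -f "$file" ] || return 1
  local lines
  lines="$(grep -c '[^[:space:]]' "$file" || true)"   # count non-blank lines
  if [ "$lines" -eq 0 ] || [ $((${lines} % ${POINTS_PER_FACE})) -ne 0 ]; then
    return 1
  fi
  echo $((${lines} / ${POINTS_PER_FACE}))
}
```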

Each of these programs can be called independently, and each takes files saved to disk as input. This makes it easy to use Dropbox or other methods to move files, and the modularity makes adjustments simple. We add an extra layer of automation through a series of bash scripts that call each program in the correct order and manage file saving and naming. The standard script is called "runDemo.sh". This script takes the latest input image captured by the rPi, runs the set of processing steps (without the brush filter, for speed), and displays the latest image using Preview (on Mac). Some of the other scripts, such as "loopImages.sh", also display results automatically by cycling through previously captured images.
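Picking the latest capture out of the synced folder could be done along these lines. This is a sketch under assumptions: it selects by file modification time and only looks at `.png` files, which may not be how runDemo.sh chooses its input.

```shell
# Sketch only: selecting by modification time is an assumption.

# latest_image DIR
# Prints the most recently modified .png in DIR, or fails if there is none.
latest_image() {
  local dir="$1"
  local newest=""
  local f
  for f in "$dir"/*.png; do
    [ -e "$f" ] || continue                       # the glob matched nothing
    if [ -z "$newest" ] || [ "$f" -nt "$newest" ]; then
      newest="$f"
    fi
  done
  [ -n "$newest" ] || return 1
  echo "$newest"
}
```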

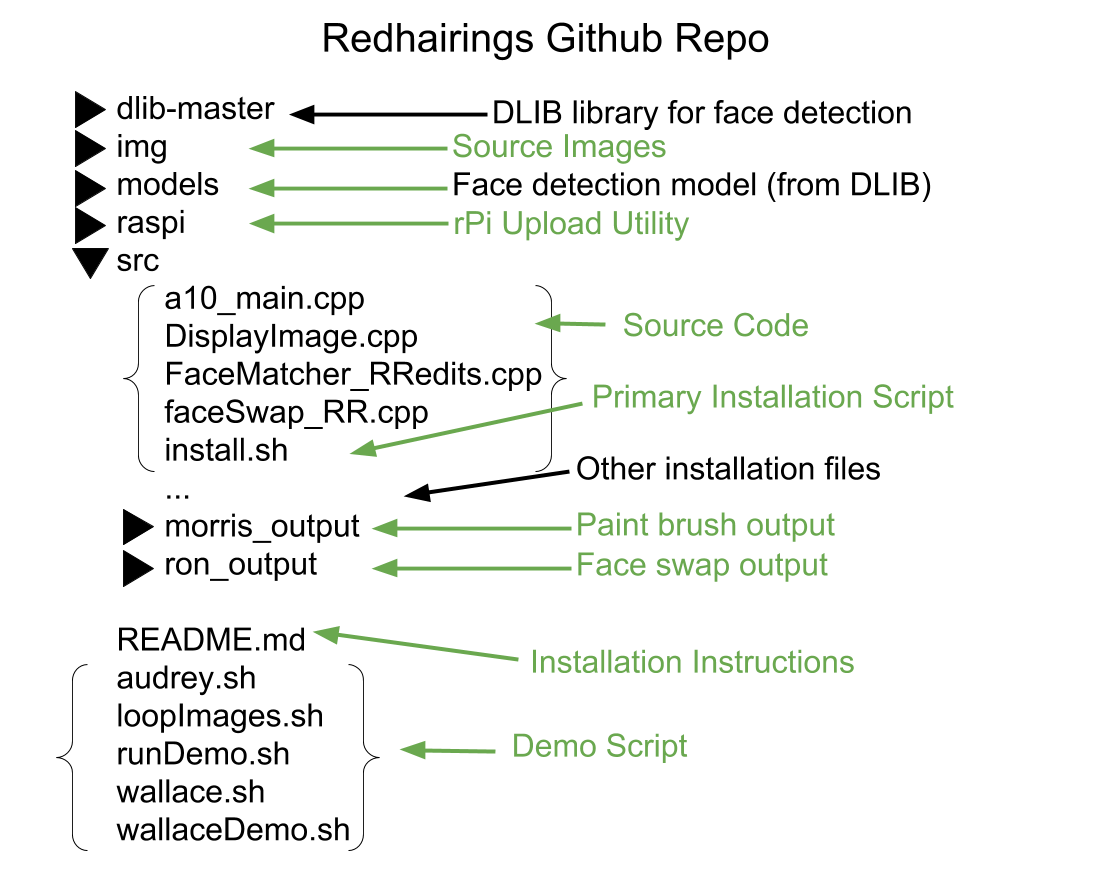

The source code was developed using the source code management program git and can be accessed on GitHub. The file system of the source code is used to save all images and provides a common directory structure for the scripts. The primary directories of interest are the "src" directory, which contains the source code; the parent directory, which holds the set of scripts; and the "img" directory, which contains example source images and is a good place to put new images for processing. The code used by the rPi to capture images and save them to Dropbox is also included, in the "raspi" directory.

Installation instructions are included as part of the GitHub readme. Since every team member used a computer running Mac OS X, we decided to include installation instructions for Mac. These instructions should be very similar for other operating systems with a UNIX-like shell (Linux, Cygwin on Windows). We assume you know the basic terminal commands for navigating to different directories in the file system using "Terminal" in Mac OS X. The build script "install.sh" in the "src" directory contains all the compilation commands needed.

After installation, you can try your first pass of processing. First, open Terminal and navigate to the code's parent directory. We use the "$" symbol to represent the terminal prompt; the characters that follow it should be typed into the terminal.

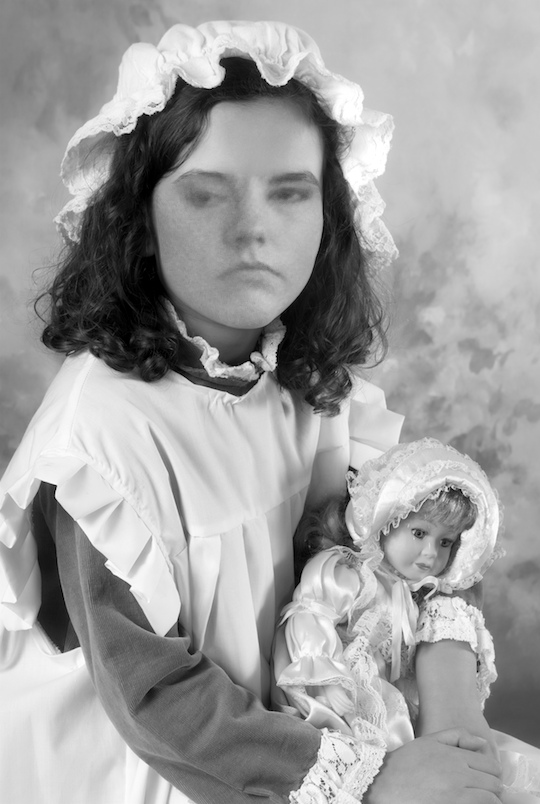

$ ./runDemo.sh img/vic_crop_cropped.png

This will produce an image of a child with a Victorian woman's face in "src/ron_output/". By default, the source image (the template onto which a new face is placed) is an image of a child holding a doll. This can be changed by replacing the string "img/child1_cropped.png" in the line that starts "./src/build/faceswap_RR ..." (line 40 at the time of writing) of runDemo.sh with your desired source file name. Note that for this to work, the source image must first be run through the pipeline as a target so that its landmark .txt file is generated.
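The landmark prerequisite for a new source image can be guarded with a small check like the one below. It is only a sketch: `detect_cmd` is a hypothetical wrapper around the landmark detector, and its argument order is an assumption.

```shell
# Sketch only: detect_cmd is a hypothetical wrapper around the landmark
# detector; its arguments (image, output file) are assumed.

# ensure_landmarks IMAGE
# Makes sure IMAGE.txt exists and is non-empty, generating it once if needed.
ensure_landmarks() {
  local image="$1"
  local landmarks="${image}.txt"
  if [ -s "$landmarks" ]; then
    return 0                         # already generated on an earlier run
  fi
  detect_cmd "$image" "$landmarks" && [ -s "$landmarks" ]
}
```

Calling this on the new source image before the swap step avoids a run that fails only because the template's landmark file was never created.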

The other scripts in the parent directory demonstrate other tasks:

audrey.sh - Show a composite image of Audrey's face and a Victorian photo to start off the demo.

loopImages.sh - Loop through precaptured images in Preview for purposes of our demo.

runDemo.sh - Update images in a loop as described above.

wallace.sh - When a key is pressed, show the latest captured images buffered in the background, so that an image of Wallace could be shown on cue during the demo.

wallaceDemo.sh - Show a composite image of Audrey's face and a Victorian photo to start off the demo.

Hardware

Hidden Camera

The hidden camera is the first step in the gag, and it is important that it is both well hidden and in a place where guests are likely to stop and interact with part of the experience. In our case, we decided that a one-way mirror was the easiest way for us to reliably capture faces before our demo.

One-Way Mirror

The one-way mirror was constructed out of a frame and a sheet of acrylic we covered with BDF S15 Window Film One Way Mirror. We hid the camera behind the mirror, and attached black fabric to hide it.

We wanted people to interact with the mirror so we could capture their faces, so we placed our setup at the top of the stairs, where everyone would have to pass by. In addition, posters with details about the day's schedule flanked our mirror and made it more likely that guests would interact.

Animation

While a mirror was a good solution for hiding a camera in Endicott House, where we were constrained by not damaging the structure and furnishings, the mirror alone was not enough to attract guests to the hidden camera. To encourage guests to interact with the mirror, we made an animation to play on a monitor behind the mirror. The light from the monitor shone through the mirror so that a floating monocle and mustache welcomed guests and enticed them to play with the mirror.

Portrait

Monitor

We used a desktop monitor to display the images. For the final presentation, we used a Dell monitor rotated to landscape mode. However, after installing it we realized that its light was polarized when viewed from 'below', so the image was difficult to see at wide angles from one side.

Surface Texture

The surface texture is the most important feature of the physical portion of the gag. To make the portraits look like real oil paintings, we used a polycarbonate sheet, cut to the monitor screen size and painted with a crackle finish. During the design process, we tested several different surface finishing methods, including Mod Podge, Crackle Glaze, and even thin tissue paper. For the final surface, we used Tim Holtz Distress Crackle Paint (Clear Rock Candy) over a thin layer of Mod Podge. Krylon dulling spray was used to diminish the glare from the polycarbonate.

Display Wall

For the final presentation and demo, we built a wall to display the portrait. We made a frame with a stand that would support the computer used to do the face swapping, and we cut a hole in the plywood surface that allowed for a press fit with the display monitor.

We chose a Victorian wallpaper and built sconces that would house tealights to create a soft glow around the edge of the painting. This sort of effect is particularly useful when lighting conditions are more controlled.

Moving Forward

Software

Brush Strokes

Although it was displayed only on the first image of our class demo, we have also built the ability to turn a newly formed face-swap image into a painted image. The algorithm that turns a photograph into an algorithmic painting is called FacePaint. At a high level, FacePaint creates a painting by applying brush strokes across an image, varying the orientation and color of each stroke depending on the content of the image. The variables that can be altered are (1) the number of brush strokes, (2) the type of brush stroke, and (3) the amount of noise, which is an importance mapping created by running a Gaussian filter over an unsharp mask.

Multiple Faces

Our current face detection algorithm actually creates an array of all the faces found in an image submitted. The demo only emphasized a single face swap to show the clarity and detail that can be obtained on one portrait frame. However, the software is easily expandable to include multiple faces, in both the source and target image. That is, in addition to swapping out one detected face onto a Victorian model, we can also populate a target image with multiple faces. These multiple faces can come either from multiple source images with a single person in each image, or from one source image with multiple people.

Hardware

Hidden Camera

Our demo hid a pycam behind glass that was coated with a thin layer of silver to make it a one-way mirror. The one-way mirror, however, is only one example of how 5Wits can implement this hidden camera. The module requires only a Raspberry Pi, either a pycam or a USB camera (we recommend a pycam for its discreet size and picture resolution), and a dongle to connect to Wi-Fi. Once powered from an outlet, the hidden camera can be located anywhere inside the adventure that seems fit. We recommend placing it in a well-lit room early in the adventure, which leaves enough time to capture several images and present the gag towards the end of the adventure. Some examples include placing the camera inside a chest that participants have to open, behind a monitor that participants will stare at for a while, or inside narrow passageways where 5Wits is confident the participants will all face one way.

Lighting

In photography, lighting is king. The pycam is very sensitive to light, but its settings can be changed to obtain appropriate images for face swapping. Because of this, we suggest that 5Wits not only provide good lighting but also place the camera modules in a room with controlled lighting. A useful tip is to place the camera next to bright lights: participants will then have a hard time seeing the camera and will be well lit when the camera snaps a picture.

Multiple Portrait Frames

The ultimate goal of this demo is to extend it to an adventure that has a Hall of Portraits. Each portrait would be one frame like the one we demoed. Having as many portraits as the maximum number of participants allowed ensures that when a large group enters the adventure, each participant will see their face on top of a Victorian model towards the end. For smaller groups, only a few of the portraits will have face swaps, while the rest show standard target images. We encourage 5Wits to be creative in this approach, for example by using fewer frames with images that contain multiple characters.
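The fallback for smaller groups could be as simple as the sketch below. It is purely illustrative: the `frame_<n>.png` naming and the two-directory layout are hypothetical, not part of the demo.

```shell
# Sketch only: the frame_<n>.png naming and directory layout are hypothetical.

# frame_image FRAME SWAP_DIR DEFAULT_DIR
# Prints the image that frame number FRAME should show: a participant's face
# swap if one exists, otherwise that frame's standard target image.
frame_image() {
  local frame="$1"
  local swap="$2/frame_${frame}.png"
  local fallback="$3/frame_${frame}.png"
  if [ -s "$swap" ]; then
    echo "$swap"
  else
    echo "$fallback"
  fi
}
```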

Sample Images